We investigate particle filters for state estimation in the context of mobile robotics, people tracking and activity recognition. The animations below illustrate particle filters and their extensions. Have fun!

Quick links:

General techniques

Robotics (mapping, localization, tracking)

- Multi-robot exploration and map merging

- Rao-Blackwellised particle filters for object tracking

- Rao-Blackwellised particle filters for SLAM (map building)

- KLD-sampling: adaptive particle filters

- Sonar-based localization

- Landmark based localization for AIBO robots in RoboCup

- Multi-robot localization

- Vision-based localization

Activity recognition and people tracking

- Learning and inferring transportation routines from GPS

- Voronoi-tracking: graph-based particle filters

- WiFi-localization using Gaussian processes

- Rao-Blackwellised particle filters for people tracking

- JPDAF particle filters for people tracking


WiFi-based people tracking using MCL and Gaussian Process sensor models.

This animation shows tracking of a person carrying a laptop that measures wireless signal strengths. The approach uses Monte Carlo localization (MCL) to track the person's location on a graph structure, and Gaussian processes to model the signal strengths of the access points.
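A Gaussian-process sensor model of this kind can be sketched as GP regression from a 2D position to the signal strength (RSS, in dBm) of one access point; the predictive mean and variance then give the likelihood of a measured RSS at each particle's position. This is a minimal NumPy sketch, not the published implementation: it assumes a squared-exponential kernel, a constant prior mean, and hand-picked hyperparameters (`length`, `signal_var`, `noise_var` are hypothetical values; in practice they would be fit to training data).

```python
import numpy as np

def rbf(a, b, length=3.0, signal_var=25.0):
    """Squared-exponential kernel between point sets a (n x 2) and b (m x 2)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return signal_var * np.exp(-0.5 * d2 / length**2)

class SignalStrengthGP:
    """GP model of one access point's signal strength as a function of position."""

    def __init__(self, X, y, noise_var=4.0, length=3.0, signal_var=25.0):
        self.X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        self.kern = {"length": length, "signal_var": signal_var}
        self.noise_var = noise_var
        self.prior_mean = float(y.mean())  # constant prior mean (assumption)
        K = rbf(self.X, self.X, **self.kern) + noise_var * np.eye(len(self.X))
        self.L = np.linalg.cholesky(K)
        # alpha = K^{-1} (y - prior_mean), computed through the Cholesky factor
        self.alpha = np.linalg.solve(self.L.T, np.linalg.solve(self.L, y - self.prior_mean))

    def predict(self, Xs):
        """Predictive mean and variance of the noise-free signal at positions Xs."""
        Xs = np.asarray(Xs, dtype=float)
        Ks = rbf(self.X, Xs, **self.kern)
        mean = self.prior_mean + Ks.T @ self.alpha
        v = np.linalg.solve(self.L, Ks)
        var = np.diag(rbf(Xs, Xs, **self.kern)) - np.sum(v**2, axis=0)
        return mean, var

    def likelihood(self, Xs, rss):
        """p(rss | position) for each position: Gaussian with GP mean, GP variance plus noise."""
        mean, var = self.predict(Xs)
        s2 = var + self.noise_var
        return np.exp(-0.5 * (rss - mean) ** 2 / s2) / np.sqrt(2 * np.pi * s2)
```

Far from the training locations the predictive variance grows back to `signal_var`, so an RSS reading there constrains the particles only weakly. With several access points, one would typically multiply the per-access-point likelihoods, assuming independence.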

See also:

- Projects web-site of the RSE-lab

- Gaussian Processes for Signal Strength-Based Location Estimation.

B. Ferris, D. Haehnel, and D. Fox. RSS-06.

- WiFi-SLAM Using Gaussian Process Latent Variable Models.

B. Ferris, D. Fox, and N. Lawrence. IJCAI-07.

- Large-Scale Localization from Wireless Signal Strength.

J. Letchner, D. Fox, and A. LaMarca. AAAI-05.

Global robot localization using sonar sensors

This example shows the ability of particle filters to represent the ambiguities occurring during global robot localization. The animation shows a series of sample sets (projected into 2D) generated during global localization using the robot's ring of 24 sonar sensors. The samples are shown in red and the sensor readings are plotted in blue. Notice that the robot is drawn at the estimated position, which is not the correct one in the beginning of the experiment.
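The predict, weight, resample cycle behind these sample sets can be sketched in a few lines. To keep it visible, this sketch replaces the 2D map and the 24-sonar beam model with a hypothetical 1D corridor and a noisy binary door detector (`CORRIDOR`, `DOORS` and all probabilities are invented for illustration). Because several doors look alike, the particle set stays multimodal until motion disambiguates it, much like the ambiguities in the animation.

```python
import random

CORRIDOR = 20.0           # hypothetical corridor length (m)
DOORS = [3.0, 7.0, 15.0]  # hypothetical door positions (m)

def door_likelihood(x, saw_door, width=0.5, p_hit=0.9, p_false=0.1):
    """p(door reading | robot at x) for a noisy binary door detector."""
    near = any(abs(x - d) < width for d in DOORS)
    if saw_door:
        return p_hit if near else p_false
    return (1.0 - p_hit) if near else (1.0 - p_false)

def global_init(n, rng=None):
    """Global localization: spread n particles uniformly over the corridor."""
    rng = rng if rng is not None else random.Random()
    return [rng.uniform(0.0, CORRIDOR) for _ in range(n)]

def mcl_step(particles, motion, saw_door, rng=None, motion_sd=0.1):
    rng = rng if rng is not None else random.Random()
    # 1. Prediction: move every particle with a noisy odometry model, clamped to the corridor
    moved = [min(max(x + motion + rng.gauss(0.0, motion_sd), 0.0), CORRIDOR - 1e-9)
             for x in particles]
    # 2. Correction: importance weight = likelihood of the sensor reading
    weights = [door_likelihood(x, saw_door) for x in moved]
    # 3. Resampling proportional to the weights
    return rng.choices(moved, weights=weights, k=len(moved))
```

A run starts with `particles = global_init(500)` and then calls `mcl_step(particles, 1.0, saw_door)` once per motion and reading.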

- Monte Carlo localization: Efficient position estimation for mobile robots.

D. Fox, W. Burgard, F. Dellaert, and S. Thrun. AAAI-99.

- Particle filters for mobile robot localization.

D. Fox, W. Burgard, F. Dellaert, and S. Thrun. Sequential Monte Carlo Methods in Practice. Springer Verlag, New York, 2000.

KLD-sampling: Adaptive particle filters

In this experiment, we localized the robot globally in a map of the third floor of beautiful Sieg Hall, our former Computer Science building. During localization, the sample set size is adapted by choosing the number of samples so that, with high probability, the error of the sample-based approximation does not exceed a pre-specified bound. The animation shows the sample sets during localization using sonar sensors (upper picture) and laser range-finders (lower picture). At each iteration, the robot is plotted at the position estimated from the most recent sample set. The blue lines indicate the sensor measurements (note the noise in the sonar readings!). The number of samples is indicated in the lower left corner (we limited it to 40,000). The time between animation frames is proportional to the time needed to update the sample set, but the animations are much slower than the actual processing: during tracking, a sample set is typically updated in less than 0.05 s.

The animations illustrate that the approach adjusts the number of samples as the robot localizes itself; the number can also increase when the robot becomes uncertain. Furthermore, the experiment shows that simply by using a more accurate sensor, such as a laser range-finder, the approach automatically chooses fewer samples.
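The adaptation rule can be sketched as follows: samples are drawn one at a time, each is assigned to a bin of a discretized state space, and sampling stops once the count exceeds a bound that grows with the number k of non-empty bins. The bound below uses the Wilson-Hilferty approximation of the chi-square quantile as in KLD-sampling, with error bound epsilon and confidence 1 - delta. The `draw` callback, the bin size, and `n_min` are assumptions of this sketch; the 40,000 cap mirrors the limit used in the experiment.

```python
import math
from statistics import NormalDist

def kld_bound(k, epsilon=0.05, delta=0.01):
    """Samples needed so that, with probability 1 - delta, the KL divergence between the
    sample-based approximation and the true (discretized) posterior is below epsilon."""
    if k <= 1:
        return 1
    z = NormalDist().inv_cdf(1.0 - delta)  # upper 1 - delta quantile of N(0, 1)
    a = 2.0 / (9.0 * (k - 1))
    return math.ceil((k - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3)

def kld_sample(draw, bin_size, epsilon=0.05, delta=0.01, n_min=10, n_max=40_000):
    """Draw samples until their number reaches the KLD bound for the bins they occupy."""
    samples, bins = [], set()
    while len(samples) < n_max:
        x = draw()  # one sample from the predictive belief, as a coordinate tuple
        samples.append(x)
        bins.add(tuple(math.floor(c / bin_size) for c in x))
        if len(samples) >= max(n_min, kld_bound(len(bins), epsilon, delta)):
            break
    return samples
```

A focused belief occupies few bins and stops early; a spread-out belief during global localization keeps opening new bins and therefore draws many more samples, which is the behavior visible in the animations.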

- Adapting the sample size in particle filters through KLD-sampling.

D. Fox. International Journal of Robotics Research (IJRR), 2003.

- KLD-Sampling: Adaptive Particle Filters.

D. Fox. NIPS-01.

People tracking using anonymous and id sensors.

Consider the task of tracking the location of people in an environment that is equipped with ceiling- and wall-mounted infrared and ultrasound id sensors, as well as with laser range-finders. The id sensors provide only very coarse location information, while the laser range-finders provide accurate location information but do not identify the people. This leads to two coupled data association problems: first, assigning anonymous position measurements to the persons being tracked; second, assigning ids to the persons being tracked based on the id measurements received. We apply Rao-Blackwellised particle filters for this purpose, where each particle describes a history of assignments along with the resulting trajectories of the people. Each particle consists of a bank of Kalman filters, one for each person.
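The anonymous-measurement half of this scheme can be sketched as follows: each particle holds a bank of Kalman filters, samples which person caused a laser detection in proportion to each filter's predictive likelihood, updates only that filter, and is weighted by the marginal likelihood of the detection. This is a simplified sketch under several assumptions: one detection per step, no false alarms, no id sensors, isotropic 2D filters with a random-walk motion model, and hypothetical noise values `q` and `r`. Resampling (which would need deep copies of the filter banks) is omitted.

```python
import math
import random
from dataclasses import dataclass, field

@dataclass
class PersonKF:
    """Kalman filter for one person's 2D position with isotropic covariance var * I."""
    x: float
    y: float
    var: float

    def predict(self, q):
        self.var += q  # random-walk motion model

    def likelihood(self, z, r):
        s = self.var + r  # innovation variance per axis
        d2 = (z[0] - self.x) ** 2 + (z[1] - self.y) ** 2
        return math.exp(-0.5 * d2 / s) / (2 * math.pi * s)

    def update(self, z, r):
        k = self.var / (self.var + r)  # Kalman gain
        self.x += k * (z[0] - self.x)
        self.y += k * (z[1] - self.y)
        self.var *= 1 - k

@dataclass
class Particle:
    filters: list                                    # bank of Kalman filters, one per person
    assignments: list = field(default_factory=list)  # sampled association history
    weight: float = 1.0

def rbpf_step(particles, z, q=0.1, r=0.05, rng=None):
    """Process one anonymous position measurement z = (x, y)."""
    rng = rng if rng is not None else random.Random()
    for p in particles:
        for f in p.filters:
            f.predict(q)
        liks = [f.likelihood(z, r) for f in p.filters]
        # Sample which person caused z, proportional to each filter's predictive likelihood
        k = rng.choices(range(len(liks)), weights=liks)[0]
        p.assignments.append(k)
        p.filters[k].update(z, r)
        # Importance weight: marginal likelihood of z under a uniform prior over persons
        p.weight *= sum(liks) / len(liks)
    total = sum(p.weight for p in particles)
    for p in particles:
        p.weight /= total
```

Because the positions are handled analytically by the Kalman filters, the particles only need to cover the discrete assignment histories, which is the point of Rao-Blackwellisation here.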

The following animations show results with sensor data recorded at the Intel Research Lab in Seattle. The small blue dots show the beam end points of the two laser range-finders; blue dots moving through free space indicate the presence of persons. Note that there are also several false alarms. The filled circles visualize a measurement of an IR sensor positioned at the center of the circle. The circles have a constant radius of 6.5 m, which corresponds to the range up to which the IR sensors detect signals. Ultrasound measurements are visualized using dashed circles, where the radius of the circle corresponds to the distance at which the receiver detected a person. In both cases, the color of the circle indicates the id of the person being detected. The colors of the circles indicate the uncertainty in the person's identity. After a burn-in phase, both identities and track associations are sampled.

Animations: Raw data | Most likely hypothesis | All hypotheses

- People Tracking with Anonymous and ID-sensors Using Rao-Blackwellised Particle Filters.

D. Schulz, D. Fox, and J. Hightower. IJCAI-03.

Rao-Blackwellised particle filters for object tracking

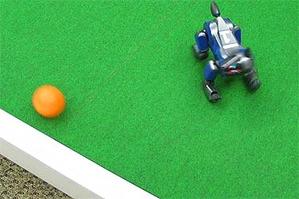

We developed Rao-Blackwellised particle filters for tracking an object and its interactions with the environment. To do so, the approach estimates the joint posterior over the robot location, the object location, and the object interactions. The video on the left shows the real robot; the animation on the right illustrates the Rao-Blackwellised estimate. Red circles are particles for the robot location, and each white circle is a Kalman filter representing the ball's location and velocity. Landmark detections are indicated by colored circles on the field (see also our example of landmark-based localization for AIBO robots below).
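Each white circle corresponds to a Kalman filter over the ball's position and velocity, conditioned on one robot-location particle. A minimal sketch of one axis of such a constant-velocity filter follows; the noise parameters are hypothetical, the measurement is assumed to be a position-only observation, and the interaction modes from the paper (the ball's motion changing when it interacts with the environment) are not modeled.

```python
def cv_predict(x, P, dt, q):
    """Constant-velocity prediction for x = [position, velocity] with 2x2 covariance P."""
    pos, vel = x
    x_new = [pos + dt * vel, vel]
    # F P F^T with F = [[1, dt], [0, 1]]
    p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1]
    # Add Q for piecewise-constant white-noise acceleration with variance q
    P_new = [[p00 + q * dt**4 / 4, p01 + q * dt**3 / 2],
             [p10 + q * dt**3 / 2, p11 + q * dt**2]]
    return x_new, P_new

def cv_update(x, P, z, r):
    """Kalman update with a position measurement z (H = [1, 0]) of variance r."""
    s = P[0][0] + r                      # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
    innov = z - x[0]
    x_new = [x[0] + k0 * innov, x[1] + k1 * innov]
    # P <- (I - K H) P
    P_new = [[P[0][0] - k0 * P[0][0], P[0][1] - k0 * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new
```

Only position is observed, yet the filter recovers velocity from successive detections; in the Rao-Blackwellised filter, every robot particle would run its own copy of this update.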

Animations: Real robot | Sample-based belief

- Map-based Multiple Model Tracking of a Moving Object.

C. Kwok and D. Fox. RoboCup Symposium 2004. RoboCup Scientific Challenge Award.

Voronoi-tracking: people tracking with very sparse sensor data

Consider the task of tracking the location of a person using id sensor data provided by ultrasound and infrared badge systems installed throughout an environment (same as above, but without laser range-finders). Even though particle filters are well suited to represent the highly uncertain beliefs resulting from such noisy and sparse sensor data, particles spread over the full 2D space are not the most appropriate representation for this task. We estimate the location of a person by projecting the particles onto a Voronoi graph of the environment (see green lines in right picture). This representation allows more efficient tracking, and the probabilities of the transitions on the graph can easily be learned using expectation maximization (EM), thereby adapting the tracking process to a specific user.
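The graph-based motion model and its EM re-estimation can be sketched at node level: particles jump between Voronoi-graph nodes according to learned transition probabilities, and the M-step renormalizes expected transition counts. This is a coarse, hypothetical sketch: the real representation places particles on graph edges, and in full EM the expected counts come from smoothed estimates over whole trajectories, whereas `accumulate_counts` here simply uses the particles' weighted transitions. The graph, node names and pseudo-count `prior` are assumptions.

```python
import random

def propagate(particles, transitions, rng=None):
    """Move each particle (a graph node) to a successor drawn from the transition probabilities."""
    rng = rng if rng is not None else random.Random()
    moved = []
    for node in particles:
        successors = list(transitions[node])
        probs = [transitions[node][s] for s in successors]
        moved.append(rng.choices(successors, weights=probs)[0])
    return moved

def accumulate_counts(counts, before, after, weights):
    """Add weighted transition counts before[i] -> after[i] (a simplified E-step)."""
    for a, b, w in zip(before, after, weights):
        counts.setdefault(a, {})
        counts[a][b] = counts[a].get(b, 0.0) + w
    return counts

def m_step(graph, counts, prior=1.0):
    """Re-estimate p(next node | node) from counts, with a pseudo-count on every graph edge."""
    probs = {}
    for node, successors in graph.items():
        c = counts.get(node, {})
        total = sum(c.get(s, 0.0) for s in successors) + prior * len(successors)
        probs[node] = {s: (c.get(s, 0.0) + prior) / total for s in successors}
    return probs
```

The pseudo-count keeps rarely used edges at non-zero probability, so a user's habitual routes become more likely without ruling out the others.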


- Voronoi Tracking: Location Estimation Using Sparse and Noisy Sensor Data.

L. Liao, D. Fox, J. Hightower, H. Kautz, and D. Schulz. IROS-03.

Distributed mapping and exploration

This animation shows three robots as they explore the Allen Center. The robots start from different, unknown locations. Initially, each robot explores on its own and estimates where the other robots are relative to its own map (using a particle filter). Once it finds a good hypothesis for another robot's location, it arranges a meeting with this robot. If they meet, the hypothesis is verified, and the robots merge their maps and coordinate their exploration. Go to our mapping web site for more information.

- Centibots: Very large scale distributed robotic teams.

K. Konolige, D. Fox, C. Ortiz, A. Agno, M. Eriksen, B. Limketkai, J. Ko, B. Morisset, D. Schulz, B. Stewart, R. Vincent. ISER-04.

- The Revisiting Problem in Mobile Robot Map Building: A Hierarchical Bayesian Approach.

B. Stewart, J. Ko, D. Fox, and K. Konolige. UAI-03.

- A Hierarchical Bayesian Approach to the Revisiting Problem in Mobile Robot Map Building.

D. Fox, J. Ko, K. Konolige, and B. Stewart. International Symposium on Robotics Research (ISRR), 2003.

- A Practical, Decision-theoretic Approach to Multi-robot Mapping and Exploration.

J. Ko, B. Stewart, D. Fox, K. Konolige, and B. Limketkai. IROS-03.

Rao-Blackwellised particle filters for laser-based SLAM

This animation shows Rao-Blackwellised particle filters for map building. The robot trajectories are sampled and, conditioned on each trajectory, a map is built. Shown is the map of the most likely particle only.

- An Efficient FastSLAM Algorithm for Generating Maps of Large-scale Cyclic Environments From Raw Laser Range Measurements.

D. Haehnel, W. Burgard, D. Fox, and S. Thrun. IROS-03.

Estimating transportation routines from GPS

In this project, the particle filter estimates a person's location and mode of transportation (bus, foot, car). A hierarchical dynamic Bayesian network is trained to additionally learn and infer the person's goals and trip segments. The left animation shows a person getting on and off a bus. The color of the particles indicates the current estimate of the mode of transportation (foot = blue, bus = green, car = red). The middle animation shows the prediction of the person's current goal (black line); the size of the blue circles indicates the probability of each location being the goal. The right animation shows the detection of an error: the person fails to get off the bus at the marked location.
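How a particle filter can carry the transportation mode alongside location is sketched below for the mode variable alone: particles occasionally switch mode, are weighted by how well the GPS-derived speed fits that mode, and the weighted fraction per mode gives its probability. All speeds, the switching probability and the function names are hypothetical; the actual system uses a hierarchical dynamic Bayesian network with street, bus-route and goal information that this sketch does not attempt to reproduce.

```python
import math
import random

MODES = ("foot", "bus", "car")
MEAN_SPEED = {"foot": 1.4, "bus": 8.0, "car": 14.0}  # hypothetical mean speeds (m/s)
SPEED_SD = 2.0                                       # hypothetical spread (m/s)

def speed_likelihood(mode, observed_speed):
    """Unnormalized Gaussian likelihood of a GPS-derived speed under a transportation mode."""
    return math.exp(-0.5 * ((observed_speed - MEAN_SPEED[mode]) / SPEED_SD) ** 2)

def mode_step(particles, observed_speed, p_switch=0.05, rng=None):
    """One filter step over the mode variable; returns resampled particles and p(mode)."""
    rng = rng if rng is not None else random.Random()
    # Prediction: with small probability a particle re-draws its mode
    predicted = [rng.choice(MODES) if rng.random() < p_switch else m for m in particles]
    # Correction: weight by the speed likelihood
    weights = [speed_likelihood(m, observed_speed) for m in predicted]
    total = sum(weights)
    posterior = {m: sum(w for pm, w in zip(predicted, weights) if pm == m) / total
                 for m in MODES}
    return rng.choices(predicted, weights=weights, k=len(predicted)), posterior
```

Coloring each particle by its `mode` entry gives a picture analogous to the blue, green and red particles in the left animation.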

Animations: Getting on and off a bus | Goal prediction | Error detection

- Learning and Inferring Transportation Routines.

L. Liao, D. Fox, and H. Kautz. AAAI-04. AAAI Outstanding Paper Award.

- Opportunity Knocks: a System to Provide Cognitive Assistance with Transportation Services.

D. J. Patterson, L. Liao, K. Gajos, M. Collier, N. Livic, K. Olson, S. Wang, D. Fox, and H. Kautz. UBICOMP-04.

Landmark-based localization and error recovery

This animation shows how a Sony AIBO robot localizes itself on a robot soccer field. Landmark detections are indicated by colored circles. To demonstrate re-localization capabilities, the robot is picked up and carried to a different location.

- An experimental comparison of localization methods continued.

J.-S. Gutmann and D. Fox. IROS-02.

Adaptive real-time particle filters

Due to their sample-based representation, particle filters are computationally less efficient than, e.g., Kalman filters. As a result, sensor data can arrive faster than the particle filter is able to process it. Real-time particle filters deal with this problem by representing the belief with mixtures of sample sets, thereby avoiding the loss of sensor data under limited computational resources. The size of the mixture is adapted to the uncertainty using KLD-sampling (see above).
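The bookkeeping side of this idea can be sketched as follows: when several observations arrive during one filter update, the sample budget is split so that each observation gets its own (smaller) weighted sample set, and the next belief is drawn from a weighted mixture of those sets. The mixture weights `alphas` are plain inputs here; how to choose them well is the core of the real-time particle filter and is not reproduced in this hypothetical sketch.

```python
import random

def split_budget(n_total, n_obs):
    """Split the samples available per filter update across the observations that arrived."""
    base, extra = divmod(n_total, n_obs)
    return [base + (1 if i < extra else 0) for i in range(n_obs)]

def sample_from_mixture(sample_sets, set_weights, alphas, n, rng=None):
    """Draw n samples from the mixture sum_i alphas[i] * (weighted sample set i)."""
    rng = rng if rng is not None else random.Random()
    out = []
    # First pick a mixture component, then a sample within that component's weighted set
    for i in rng.choices(range(len(sample_sets)), weights=alphas, k=n):
        out.append(rng.choices(sample_sets[i], weights=set_weights[i])[0])
    return out
```

For example, with a budget of 10 samples and three observations arriving during one update, `split_budget(10, 3)` returns `[4, 3, 3]`, so every observation is incorporated instead of being dropped.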

- Real-time particle filters.

C. Kwok, D. Fox, and M. Meila. Proceedings of the IEEE, 2004.

- Real-time particle filters.

C. Kwok, D. Fox, and M. Meila. NIPS-02.

Tracking multiple people using JPDAF particle filters

In this experiment, we apply particle filters to the problem of tracking multiple moving objects. Using its laser range-finders, the robot is able to track a changing number of people even while it is in motion. We address the data association problem by combining joint probabilistic data association filters (JPDAFs) with particle filters, using one particle filter for each person.
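The association step can be sketched for small problems by enumerating joint events: each measurement is assigned either to one person or to clutter, no person explains two measurements, and each event's probability multiplies detection probabilities, measurement likelihoods (averaged over that person's particles) and a clutter density. Summing the events in which measurement j goes to person k gives the association probability beta[j][k], which can then weight that person's particles. This is a sketch under assumptions: isotropic Gaussian measurement noise, hypothetical `p_detect` and `clutter` values, and brute-force enumeration that only scales to a handful of people and measurements.

```python
import itertools
import math

def gaussian_likelihood(z, x, sigma):
    """Isotropic 2D Gaussian p(z | person at x)."""
    d2 = (z[0] - x[0]) ** 2 + (z[1] - x[1]) ** 2
    return math.exp(-0.5 * d2 / sigma**2) / (2 * math.pi * sigma**2)

def likelihood_matrix(measurements, tracks, sigma):
    """L[j][k]: likelihood of measurement j for person k, averaged over person k's particles."""
    return [[sum(gaussian_likelihood(z, x, sigma) for x in particles) / len(particles)
             for particles in tracks]
            for z in measurements]

def association_probabilities(L, n_tracks, p_detect=0.9, clutter=1e-3):
    """beta[j][k]: posterior probability that measurement j was caused by person k."""
    beta = [[0.0] * n_tracks for _ in L]
    total = 0.0
    # A joint event assigns each measurement to a person (k >= 0) or to clutter (k = -1)
    for event in itertools.product(range(-1, n_tracks), repeat=len(L)):
        used = [k for k in event if k >= 0]
        if len(used) != len(set(used)):
            continue  # each person causes at most one measurement
        p = (1.0 - p_detect) ** (n_tracks - len(used))  # persons left undetected
        for j, k in enumerate(event):
            p *= p_detect * L[j][k] if k >= 0 else clutter
        total += p
        for j, k in enumerate(event):
            if k >= 0:
                beta[j][k] += p
    return [[b / total for b in row] for row in beta]

def weight_particles(particles, measurements, beta_column, sigma):
    """Weight one person's particles by association-weighted measurement likelihoods."""
    return [sum(b * gaussian_likelihood(z, x, sigma) for z, b in zip(measurements, beta_column))
            for x in particles]
```

Because the association probabilities are soft, a person's particle filter can still be updated sensibly when two people pass close to each other and the assignment is ambiguous.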

- People Tracking with a Mobile Robot Using Sample-based Joint Probabilistic Data Association Filters.

D. Schulz, W. Burgard, D. Fox, and A. B. Cremers. International Journal of Robotics Research (IJRR), 22(2), 2003.

- Tracking Multiple Moving Objects with a Mobile Robot.

D. Schulz, W. Burgard, D. Fox, and A. B. Cremers. CVPR-01.

Multi-robot localization

This experiment is designed to demonstrate the potential benefits of collaborative multi-robot localization. Our approach exploits the additional information available when localizing multiple robots by transferring information across different robotic platforms. When one robot detects another, the detection is used to synchronize the individual robots' beliefs, thereby reducing the uncertainty of both robots during localization. Sample sets are combined using density trees.

The approach was evaluated using our two Pioneer robots Robin and Marian. In this example, Robin performs global localization by moving from left to right in the lower corridor. Marian, the robot in the lab, detects Robin as it moves through the corridor. As the detection takes place, a new sample set is created that represents Marian's belief about Robin's position. This sample set is transformed into a density tree, which represents the density needed to update Robin's belief. As the experiment shows, the detection event suffices to uniquely determine Robin's position.
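The detection update can be sketched in three steps: push each of the detecting robot's pose samples through the measured distance and bearing to obtain samples of the detected robot's position, turn those samples into a density, and multiply the detected robot's particle weights by that density. This sketch swaps the density tree for a plain grid histogram (a simpler stand-in, not the method used here), ignores noise in the detection itself, and shows only the update of the detected robot; all function names and the `cell` and `floor` parameters are hypothetical.

```python
import math
from collections import Counter

def detected_robot_samples(samples_a, distance, bearing):
    """Map robot A's pose samples (x, y, theta) and a detection to samples of B's position."""
    return [(x + distance * math.cos(th + bearing), y + distance * math.sin(th + bearing))
            for x, y, th in samples_a]

def histogram_density(points, cell=0.5):
    """Grid-histogram density estimate (a stand-in for the density tree)."""
    counts = Counter((math.floor(px / cell), math.floor(py / cell)) for px, py in points)
    n = len(points)

    def density(px, py):
        return counts[(math.floor(px / cell), math.floor(py / cell))] / (n * cell * cell)

    return density

def reweight(samples_b, weights_b, density, floor=1e-6):
    """Multiply B's particle weights by the detection density; floor keeps other modes alive."""
    new = [w * (density(x, y) + floor) for (x, y, _), w in zip(samples_b, weights_b)]
    total = sum(new)
    return [w / total for w in new]
```

If the detecting robot is well localized, its projected samples form a tight cluster, so B's particles outside that cluster receive almost no weight; this is how a single detection can resolve B's global ambiguity.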

The next animation shows collaborative localization using eight robots (simulated data). All robots are equipped with sonar sensors. Whenever a robot detects another one, both robots are drawn enlarged (the detecting robot is larger). Note how the ambiguities in the beliefs of the detected robots are resolved.

- A Probabilistic Approach to Collaborative Multi-Robot Localization.

D. Fox, W. Burgard, H. Kruppa, and S. Thrun. Autonomous Robots, 8(3), 2000.

Vision-based localization

During the museum tour-guide project in the NMAH, Minerva was equipped with a camera pointed towards the ceiling. The figure below shows the ceiling map of the museum. While the camera was not essential for the museum project (we mostly relied on laser range-finders), the data collected during the project was used to show the potential benefits of combining particle filters with a camera pointed towards the ceiling. The data used here is extremely difficult, as the robot traveled at speeds of up to 163 cm/s. The experiment shows global localization using the information collected by the camera. The samples are plotted in red, and the small yellow circle indicates the true location of the robot.
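A ceiling-camera sensor model for such a filter can be sketched by comparing a single brightness reading against the ceiling map at each particle's position, then resampling (the Condensation algorithm is a particle filter of this form). The scalar brightness reading, the grid layout `ceiling[row][col]`, the cell size and the noise `sd` are all assumptions of this sketch. Since many ceiling cells share similar brightness, one reading is highly ambiguous, which is why global localization needs many readings along the robot's path.

```python
import math
import random

def brightness_likelihood(ceiling, x, y, observed, sd=10.0, cell=1.0):
    """p(observed brightness | camera under the ceiling cell containing (x, y))."""
    row, col = int(y // cell), int(x // cell)
    if not (0 <= row < len(ceiling) and 0 <= col < len(ceiling[0])):
        return 0.0  # pose outside the map
    expected = ceiling[row][col]
    return math.exp(-0.5 * ((observed - expected) / sd) ** 2)

def condensation_update(particles, ceiling, observed, rng=None, **kw):
    """Weight (x, y, theta) particles by the brightness observation and resample."""
    rng = rng if rng is not None else random.Random()
    weights = [brightness_likelihood(ceiling, x, y, observed, **kw) for x, y, _ in particles]
    if sum(weights) == 0.0:
        return list(particles)  # no particle explains the reading; keep the set unchanged
    return rng.choices(particles, weights=weights, k=len(particles))
```

In a full filter this correction would alternate with a motion update from odometry, as in the sonar-based example above.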

- Using the Condensation algorithm for robust, vision-based mobile robot localization.

F. Dellaert, W. Burgard, D. Fox, and S. Thrun. CVPR-99.