Energy-Aware Programming Models and Architectures

Energy is increasingly a first-order concern in computer systems design. Battery life is a major constraint in mobile systems, and power and cooling costs largely dominate the cost of equipment in data centers. More fundamentally, current trends point toward a "utilization wall," in which the amount of active die area is limited by how much power can be fed to a chip.

Support for Explicit Runtime Concurrency Errors

This project posits that shared-memory multithreaded concurrency errors should be precisely detected and should fail-stop at runtime, just as segmentation faults and division-by-zero errors do today. We aim to provide general support for concurrency exceptions throughout the system stack. Specifically, we are investigating the semantics and specification of concurrency exceptions at the language level, their implications for the compiler and runtime systems, how they should be delivered, and how efficient architecture support can enable them.

Grappa: Latency Tolerant Distributed Shared Memory

Grappa is a modern take on software distributed shared memory (DSM) for in-memory data-intensive applications (e.g., ad placement, social network analysis, and PageRank). Grappa enables users to program a cluster as if it were a single, large, non-uniform memory access (NUMA) machine. Performance scales up even for applications that have poor locality and input-dependent load distribution. Grappa addresses deficiencies of previous DSM systems by exploiting abundant application parallelism, often delaying individual tasks to improve overall throughput.

Improving Power Efficiency of Web-Enabled Mobile Devices

With the ubiquity of internet-enabled mobile devices, the nature of the software running on them is changing dramatically. We are exploring several alternatives to improve power efficiency: hardware/software co-design for common or costly operations, and parallelism in common applications.

Concurrency Bug Detection and Avoidance

Today's software is broken and unreliable. Software failures pose a significant cost to our economy: NIST estimates around $60B per year. In addition to the financial cost, software failures have more tangible costs in the real world, such as the large-scale blackout in the northeastern US in 2003. This project focuses on helping programmers build correct software and on making systems more reliable in spite of broken software.

Deterministic MultiProcessing (DMP)

One of the main reasons it is difficult to write multithreaded code is that current shared-memory multicore systems can execute code nondeterministically. Each time a multithreaded application runs, it can produce a different output even if supplied with the same input. This frustrates debugging efforts and limits the ability to properly test multithreaded code, both of which are major obstacles to the widespread adoption of parallel programming.
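The effect of scheduling on output can be illustrated with a small simulation. The sketch below is not the DMP system itself; it is a hypothetical model in which two threads each perform a non-atomic increment (a load followed by a store) on a shared counter, and the names `run_schedule` and `all_schedules` are made up for this illustration. Enumerating every legal interleaving shows that the same program and input can yield different results, while fixing the interleaving always yields the same one.

```python
from itertools import combinations

# Hypothetical model: each of two threads runs two steps in program order,
# step 0 = load the shared counter, step 1 = store (loaded value + 1).
STEPS_PER_THREAD = 2


def run_schedule(schedule):
    """Execute one interleaving; `schedule` is a sequence of thread ids."""
    counter = 0
    loaded = {}     # per-thread register holding the value it loaded
    next_step = {}  # per-thread program counter
    for tid in schedule:
        step = next_step.get(tid, 0)
        if step == 0:
            loaded[tid] = counter       # load
        else:
            counter = loaded[tid] + 1   # store
        next_step[tid] = step + 1
    return counter


def all_schedules():
    """Yield every interleaving of two threads that keeps program order."""
    total = 2 * STEPS_PER_THREAD
    # Pick which time slots thread 0 occupies; thread 1 gets the rest.
    for slots in combinations(range(total), STEPS_PER_THREAD):
        yield tuple(0 if i in slots else 1 for i in range(total))


if __name__ == "__main__":
    for schedule in all_schedules():
        print(schedule, "->", run_schedule(schedule))
```

Under this model, only the two schedules in which one thread finishes before the other starts produce the intended count of 2; the others lose an update. Replaying any single schedule gives the same result every time, which is the property a deterministic execution aims to provide.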

OS structures for NVRAM

Mosaic

Coarse-grained reconfigurable architectures (CGRAs) have the potential to offer performance approaching that of an ASIC with flexibility, within an application domain, similar to that of a digital signal processor. In the past, CGRAs have been encumbered by challenging programming models that are either too far removed from the hardware to offer reasonable performance or that bury the programmer in the minutiae of hardware specification.

Programming Models for Reconfigurable Computing

This is a new project focused on models for programming large reconfigurable computing platforms. We recently presented our work at the CARL 2010 workshop held at MICRO 2010.

A Model for Programming Large-Scale Configurable Computing Applications

CARL 2010 Presentation

Architectures for Quantum Computers

Quantum computers may seem like the subject of science fiction, but their tremendous computational potential is closer than we may think. Despite significant practical difficulties, small quantum devices of 5 to 7 quantum bits (qubits) have been built in the laboratory. Silicon technologies promise even greater scalability. To use these technologies effectively and help guide quantum device research, computer architects must start designing and reasoning about quantum processors now. However, two major hurdles stand in the way.

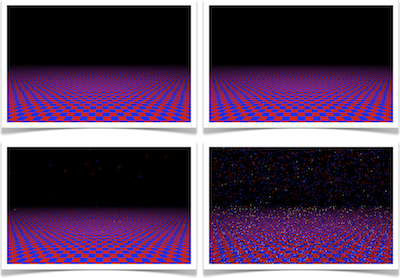

WaveScalar

Silicon technology will continue to provide an exponential increase in the availability of raw transistors. Effectively translating this resource into application performance, however, is an open challenge. Ever-increasing wire delay relative to switching speed and the exponential cost of circuit complexity make simply scaling up existing processor designs futile. Our work proposes an alternative to superscalar design called WaveScalar. WaveScalar is a dataflow instruction set architecture and execution model designed for scalable, low-complexity, high-performance processors.
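For readers unfamiliar with dataflow execution, the following is a minimal sketch of the general idea: an instruction fires as soon as all of its operands have arrived, with no program counter imposing an order. It is a generic illustration only, not WaveScalar's actual instruction set or execution model; the function `run_dataflow` and the graph encoding are assumptions made for this example.

```python
import operator
from collections import deque


def run_dataflow(nodes, inputs):
    """Evaluate a dataflow graph.

    nodes:  name -> (binary or n-ary function, list of source names)
    inputs: name -> initial value
    Returns (all computed values, order in which nodes fired).
    """
    values = dict(inputs)
    consumers = {}  # source name -> nodes waiting on it
    pending = {}    # node name -> operands still missing
    for name, (_, sources) in nodes.items():
        pending[name] = len(sources)
        for src in sources:
            consumers.setdefault(src, []).append(name)

    ready = deque(inputs)  # values that have just become available
    fired = []
    while ready:
        produced = ready.popleft()
        for node in consumers.get(produced, []):
            pending[node] -= 1
            if pending[node] == 0:  # all operands present: fire
                op, sources = nodes[node]
                values[node] = op(*(values[s] for s in sources))
                fired.append(node)
                ready.append(node)
    return values, fired


if __name__ == "__main__":
    # (a + b) * (a - b): "sum" and "diff" are independent of each other.
    graph = {
        "sum": (operator.add, ["a", "b"]),
        "diff": (operator.sub, ["a", "b"]),
        "prod": (operator.mul, ["sum", "diff"]),
    }
    values, fired = run_dataflow(graph, {"a": 5, "b": 3})
    print(values["prod"], fired)
```

In this graph, `sum` and `diff` depend only on the inputs, so hardware following a dataflow model could in principle execute them in parallel; the sketch serializes them only because it runs on a single Python thread.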