Tools and Resource Development

Giving back is important! Here at UW NLP we want researchers everywhere to have access to interesting resources and datasets. We have an indexed version of the Google Syntactic N-gram corpus. We're compiling a large-scale activity recognition dataset rooted in linguistic resources (WordNet combined with FrameNet). We're also developing an open-source toolkit for text categorization, called ARKcat, that uses Bayesian optimization to tune hyperparameters.

Multilingual Representations and Parsing

We generalize natural language processing models and tasks to many languages. We study the relationships between languages in order to improve machine translation, and we leverage information from English and other high-resource languages to improve tasks like multilingual parsing for low-resource languages. This area includes projects on multilingual word representations and multilingual parsing.

Social Science Applications: Political Science, Sociology, Psychology & more

The social sciences offer a great range of interesting problems that involve natural language processing. For instance, what does a text tell us about its author’s intents and biases? Can we measure how much a therapist empathizes with patients? A wide net of collaborations with political scientists, psychologists, and other social scientists allows us to tackle these kinds of questions.

Code with Natural Language

Programming through a natural language interface can enable novice users to program without having to learn the minute details of a domain-specific language. Accomplishing this requires solving interesting problems in NLP, such as parsing and dialog systems, and in mixed-initiative systems, such as learning a user’s utility model. As a first step towards this goal, we are building probabilistic models that parse natural language inputs into conditional (IF-THEN) style programs.

Detecting and Extracting Events

Identifying a wide range of events in free text is an important, open challenge for NLP. Previous approaches to relation extraction have focused on a limited number of relations, typically the static relations found in Wikipedia InfoBoxes, but these methods don't scale to the number and variety of events in Web text and news streams.

We are developing an approach that automatically detects event relations from news streams and learns extractors for those events. Our NewsSpike system leverages properties of news text to learn high-precision event extractors.

Language and Vision

Online data, including the web and social media, presents an unprecedented visual and textual summary of human lives, events, and activities. We, the UW NLP and Computer Vision groups, are working to design scalable new machine learning algorithms that can make sense of this ever-growing body of information by automatically inferring the latent semantic correspondences between language, images, videos, and 3D models of the world.

Interactive Learning for Semantic Parsing

Semantic parsers map natural language sentences to rich, logical representations of their underlying meaning. However, to date, they have been developed primarily for natural language database query applications using learning algorithms that require carefully annotated training data. This project aims to learn robust semantic parsers for a number of new application domains, including robotics interfaces and other spoken dialog systems, with little or no manually annotated training data.

Relation and Entity Extraction

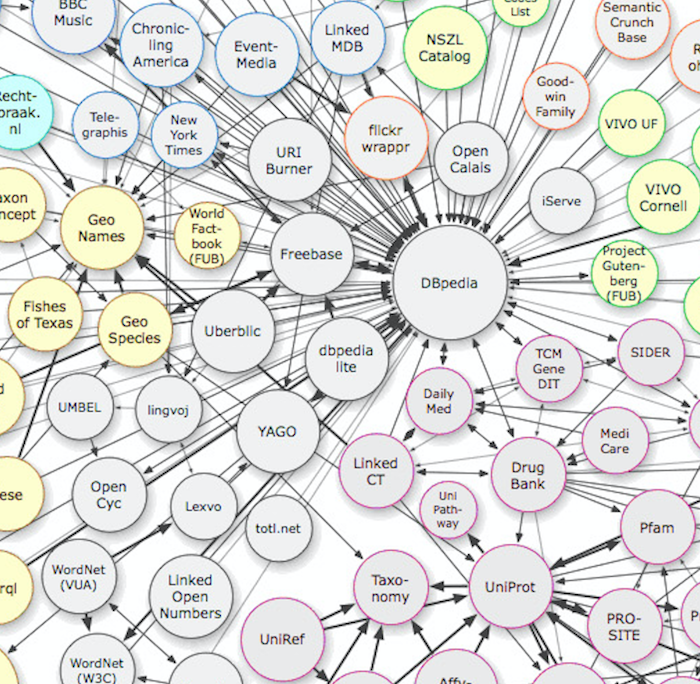

Intelligent Web search and autonomous agents responding to free text need detailed information about the entities mentioned and the relations they participate in. We have developed a number of tools to assign fine-grained entity types, link entities to Freebase, and extract relations between entities, including:

- FIGER, a fine-grained entity recognizer that assigns over 100 semantic types

Language Grounding in Robotics

A number of long-term goals in robotics, such as using robots in household settings, require robots to interact with humans. In this project, we explore how robots can learn to relate natural language to the physical world they sense and manipulate, an area of research known as grounded language acquisition.